Building an Effective Data Integrity Program Using Risk Management

Biopharmaceutical drug contamination has held the limelight for some time. In recent years, data contamination, which manifests as a breach of data integrity, has also moved to the forefront of concerns.

There is an increasing realization that data trustworthiness is crucial to ensure drug products have the desired assurance for quality and patient safety. Consequently, the current regulatory focus includes data contamination along with product contamination. This has driven biopharmaceutical companies to obtain a thorough understanding of data integrity and modify their processes (1). More importantly, this has driven conversation within the industry on the considerations for developing a corporate data integrity program that effectively reduces the propensity for data integrity issues to arise.

What is Data Integrity?

Data integrity is the trustworthiness of data. Regulatory agencies use the ALCOA+ principles (attributable, legible, contemporaneous, original, and accurate, plus complete, consistent, enduring, and available) as the criteria for the integrity of data. If the data cannot fully satisfy ALCOA+, they are not trustworthy. Data integrity’s guiding principles include the following:

- The care, custody, and continuous control of data.

- Measures to ensure paper-based and electronic good practice (GxP) data are adequately and securely protected against willful or accidental loss, damage, or unauthorized changes.

- Such measures should ensure the continuous control, integrity, and availability of regulated data.
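As an illustration of how some ALCOA+ criteria could be checked against an electronic record, here is a minimal sketch. The `GxpRecord` fields, the `alcoa_gaps` function, and the 15-minute tolerance are all hypothetical names and values chosen for this example. It covers only three attributes that lend themselves to a simple automated check; the others generally need procedural review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical tolerance for how soon after an activity its entry must be made.
MAX_RECORDING_DELAY = timedelta(minutes=15)


@dataclass(frozen=True)
class GxpRecord:
    value: str
    recorded_by: str        # individual user ID (empty if unknown)
    performed_at: datetime  # when the activity took place
    recorded_at: datetime   # when the entry was written
    is_original: bool       # first capture, as opposed to a transcription


def alcoa_gaps(record: GxpRecord) -> list[str]:
    """Return the ALCOA attributes that this record visibly fails."""
    gaps = []
    if not record.recorded_by.strip():
        gaps.append("attributable")
    delay = record.recorded_at - record.performed_at
    if delay < timedelta(0) or delay > MAX_RECORDING_DELAY:
        gaps.append("contemporaneous")
    if not record.is_original:
        gaps.append("original")
    return gaps
```

A record that returns an empty list has passed only these three checks; it is not thereby shown to be fully ALCOA+ compliant.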

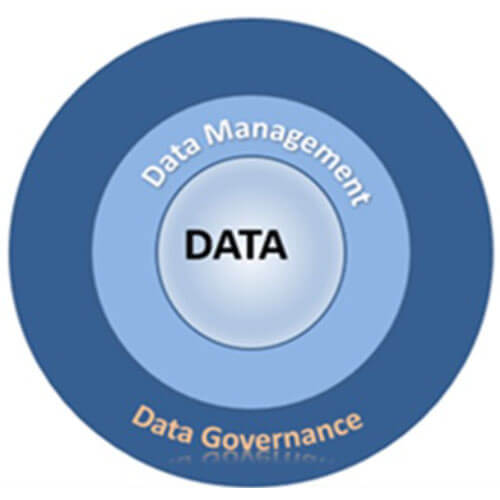

Figure 1 Data Integrity Functional Framework

A data integrity program has three main components, as shown in Figure 1. At the core is the data to be managed using the company’s data governance and data management policies and procedures.

The Data Management Association (DAMA) defines data governance as “the exercise of authority, control, and shared decision-making over the management of data assets.”

Data management consists of procedures company personnel follow to manage the organization’s data assets.

Why Data Integrity Problems Occur

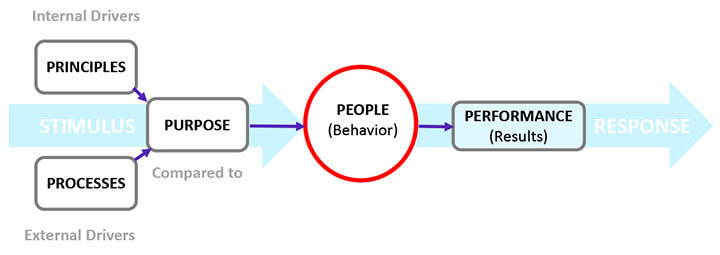

The complex dynamics of organizational entities, such as human actions and business processes and procedures, are the primary reasons for data integrity breaches. These sociotechnical dynamics are delineated in the 5P model for human cognitive behavior in Figure 2.

The 5Ps of data integrity are principles, processes, purpose, people, and performance. An effective data integrity program is designed to align the 5Ps. The primary focus is people since their performance directly impacts data integrity (2).

Figure 2 The 5Ps Influencing Cognitive Behavior

Principles are an individual’s core beliefs, guiding philosophies, and attitudes that drive the person’s behavior. They develop during childhood and are shaped by environmental factors. For example, one individual may believe it is wrong to lie and cheat as an absolute rule. In contrast, another may believe that lying and cheating could be condoned situationally, particularly if such behavior safeguards one’s interests. Principles, therefore, lie at the heart of an individual’s behavior. They are internal drivers.

Processes shape a company’s culture. In a process-centric organization, the focus is not only on what needs to be done to manufacture a product but also on the quality of interaction between management and employees. In a company where employees fear losing their jobs over mistakes, or where blame and finger-pointing are the norm, employees tend to misrepresent events rather than document mistakes. Consequently, the data integrity program should empower people and groups in decision-making instead of enforcing a top-down decision-making approach. It should also stress a “management by walking around” mode of operation since it provides direct one-on-one interaction between management and employees, increasing personal trust and familiarity.

Purpose projects the company’s intention through the articulation of the company’s vision, mission, goals, and objectives. A clear articulation of purpose by executive management in the data integrity plan plays a pivotal role in shaping the behavior of company personnel.

An effective data integrity program should be designed to ensure that employees’ principles do not conflict with the company’s business processes and purpose. For example, the program developers could design a structured interview process for identifying candidates whose core beliefs conflict with the company’s ethics and core beliefs.

In addition to understanding the 5P model of data integrity, it is also critical for the program developers to gain familiarity with the Fraud Triangle, which explains the reasons behind the fraudulent behaviors of groups and organizations.

The Fraud Triangle

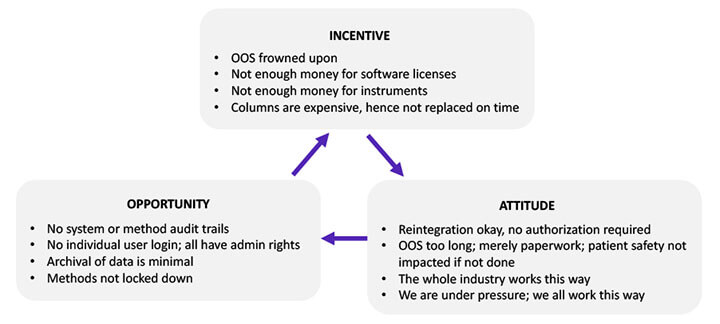

While many sociologists have conducted considerable research on the causal factors associated with fraud and data manipulation, in 2005, sociologist J.T. Wells introduced the fraud framework called The Fraud Triangle, depicted in Figure 3. The items in the triangle have been modified to apply to the biopharmaceutical industry. Fraud occurs when one or more of these causal factors exist:

- Incentive

- Opportunity

- Attitude

Figure 3 The Fraud Triangle

The incentive component of the Fraud Triangle is exemplified by management’s acquiescence to sharing software licenses to save costs. This reveals management’s tolerance toward behaviors that are detrimental to data integrity. The project team should review the company’s ethics policy and code of conduct to ensure it conveys zero tolerance for such incentive-driven behavior.

The “everybody is doing it” attitude also justifies noncompliant behavior. For example, a laboratory analyst may be tempted to record an actual reading of 3.7 as a reading of 4.0 to avoid a tedious out-of-specification investigation because everybody is doing it. An effective data integrity program must portray management’s unequivocal insistence on honesty and taking pride in it.

The opportunity for committing fraud arises when there is no mechanism to detect the fraud. For example, in older software versions, operators could switch audit trails off and on at will. This created an opportunity to perform actions that would not be recorded. Modern software inhibits such manipulation. An effective data integrity program should require that older equipment be replaced or upgraded so that it has the requisite technical controls.
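To make the audit trail point concrete, the sketch below shows one way software can remove the opportunity: the trail exposes no off switch, and each entry is chained to the previous one by a hash so that later edits become detectable. The `AuditTrail` class and its field names are hypothetical, and hash chaining is an assumption of this example rather than a technique named in the article or a description of any particular product.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(entry: dict) -> str:
    """Hash an entry's fields in a stable key order."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log with no method for disabling it or editing entries."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def record(self, user_id: str, action: str, old: str, new: str) -> None:
        entry = {
            "user_id": user_id,
            "action": action,
            "old": old,
            "new": new,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            # Chain each entry to its predecessor so gaps or edits show up.
            "prev_hash": self._entries[-1]["hash"] if self._entries else "",
        }
        entry["hash"] = _digest(entry)
        self._entries.append(entry)

    def verify(self) -> bool:
        """Recompute every hash; False means the trail was altered."""
        prev_hash = ""
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

In this sketch, changing a stored value after the fact (for instance, rewriting a recorded 3.7 as 4.0) causes `verify()` to return `False`, which gives a reviewer a mechanism to detect the change.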

The next step is exploring the controls that need to be in place to mitigate recurring issues with data integrity.

Data Integrity Controls Triad

Notwithstanding the impossibility of eliminating all data integrity vulnerabilities, controls should be established to reduce the propensity for data integrity errors. Such controls consist of the following control triad components (3):

- Management controls

- Procedural controls

- Technical controls

Management controls address the people and business factors of data integrity. They describe how individuals and groups within an organization are directed to perform certain actions while avoiding others to ensure the integrity of the data. These controls are enumerated in the company’s ethics policy, code of conduct directive, data governance program, and other guidelines. The three key areas that management controls should address are:

- Establishing a company culture

- Defining protocols for the care and control of data

- Information and communication

Establishing company culture is a management control function. It is realized through the management’s establishment of policy on company ethics and code of conduct. Whereas ethics provide a set of principles that set the tone for employee mindset and decision-making, the code of conduct contains specific rules for employee actions and behaviors.

Defining protocols for the care and control of data is also a management control function captured in a data governance program. It includes data ownership, data stewardship, the roles and responsibilities of different groups, and more.

Information and communication controls reflect management’s commitment to encouraging everyone to report data integrity failures so that similar failures can be pre-empted elsewhere. This may be accomplished through regularly scheduled, companywide reporting of data integrity failures.

Procedural Controls and Technical Controls

Procedural controls are guidelines that require or advise people to act in specific ways to preserve data integrity. They are described in the company’s standard operating procedures (SOPs). These SOPs should be established using stakeholders’ input. Doing so gives them a sense of ownership, which is necessary for successful implementation. Table 1 is a non-exhaustive list of SOPs that correspond to data integrity predicate rules enumerated in the U.S. FDA’s data integrity guidance (4).

Table 1 Integrity Regulations and Their Corresponding SOPs

| DI Regulation | Regulation Summary Description | SOPs |
|---|---|---|
| § 211.68 | Backup data are exact, complete, and secure, calibration and validation | Validation, calibration, good documentation practices, access control, and audit trail review |
| § 212.110(b) | Data stored to prevent deterioration or loss | Data backup and recovery, business continuity, and data migration archiving |
| § 211.100 | Written procedures for production and process control | Recipe procedures, and production procedures |
| § 211.160 | Laboratory controls are scientifically sound and contemporaneous | Laboratory controls, sampling, validation, calibration, GDocP, and out-of-specification |
| § 211.180 | Original records be retained | Raw data and metadata management |
| § 211.188 | Maintenance of complete batch records | Batch record specifications |
| § 211.192 | Production records review, and review of laboratory records | Audit trail review, and batch/production record review |
| § 211.194 | Records be checked and verified | Audit trail review, and batch record review |

In addition to SOPs that fulfill the predicate rules mentioned in the table above, the project team should ensure that the program establishes SOPs that satisfy the ALCOA+ principles. Table 2 is a non-exhaustive list of directives for the respective ALCOA+ principles.

Table 2 ALCOA+ Principles and Their Corresponding Directives/SOPs

| Data integrity principle | Directive/SOP |
|---|---|
| Attributable | Access control, audit trail design, date and time |
| Legible | Good documentation practices |
| Contemporaneous | Date and time, and good documentation practices |
| Original | Raw data and metadata retention |
| Accurate | Calibration, laboratory controls, change control, deviation and incident management, validation, and out-of-specification |
| Complete | Laboratory controls, good documentation practices, and manual data entry |
| Consistent | Validation, audit trail review, software development lifecycle (SDLC), GxP records management, manual integration guidance, and laboratory controls |
| Enduring | Data backup and recovery, audit trail design, building monitoring system design, and data migration and archiving |
| Available | Data backup and recovery, data archiving, and building monitoring system design |

Technical controls use technology-based contrivances to protect information systems from harm. These include individualized, role-based access for computerized systems, network protocols, firewalls and intrusion detection systems, encryption technology, and network traffic flow regulators. When used together, these controls provide a layered defense protecting data from integrity breaches.
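Individualized, role-based access can be pictured with a small sketch like the one below. The role names, permission names, and user IDs are hypothetical. A real computerized system would take them from its validated user management configuration rather than from constants in code.

```python
# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "analyst": frozenset({"create_record", "view_record"}),
    "reviewer": frozenset({"view_record", "review_audit_trail"}),
    "administrator": frozenset({"manage_users"}),
}

# Every user has an individual account mapped to exactly one role.
# There is deliberately no shared or generic login.
USER_ROLES = {
    "asmith": "analyst",
    "bjones": "reviewer",
}


def is_allowed(user_id: str, action: str) -> bool:
    """Permit an action only for a known user whose role grants it."""
    role = USER_ROLES.get(user_id)
    if role is None:
        return False
    return action in ROLE_PERMISSIONS.get(role, frozenset())
```

Keeping administration separate from record creation and review, as in this sketch, is one common way to apply segregation of duties. The exact split is a design decision for each system.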

Whereas procedural controls are primarily applied to practices and procedures during the data’s lifecycle, technical controls are designed into products to preserve data integrity. Digital tools such as a validation lifecycle management system provide technical controls that inhibit unauthorized manipulation of test records, for example by automatically capturing the date and time, the name of the tester, and the raw data so that manipulation of results can be detected.

How to Set Up a Data Integrity Program

Now that we understand why data integrity problems occur, we are better equipped to set up the data integrity program. The data integrity program advances through four phases, with each phase consisting of one or more stages (as depicted in Figure 4):

- Plan

- Develop

- Deploy

- Monitor

Figure 4 Phases and Stages of a Data Integrity Program

1. Plan phase

Designate the sponsor: During this stage, a project sponsor who is a member of the CEO’s executive team is designated. This activity is key to a program’s success. The sponsor serves as the conduit between the project team and executive management.

Form the core team: During this stage, the project sponsor forms the project core team. The core team should consist of the project manager and management-level members from the quality assurance (QA) and information technology (IT) departments, at a minimum. The project sponsor also serves as the chair for the weekly core team meetings.

Form the project team: The core team then proceeds to form the project team, which consists of members from stakeholder groups. This includes external consultants who are data integrity subject matter experts (SMEs). The SMEs’ role should include training the project team in data integrity and attending weekly project team meetings, at a minimum. Data integrity SMEs provide a degree of assurance for the program’s success.

2. Develop phase

Data integrity audit: This is the discovery stage of the project. The project team, along with equipment users, conducts Gemba Walks. These walks involve physically visiting manufacturing floor equipment to understand the features that meet the data integrity expectations, such as individualized access control, audit trail capability, communication network connectivity, and backup capability (5). These walks also identify business processes during equipment use that provide opportunities for data breaches and misuse. It is recommended that the team seek answers to a predetermined set of questions and capture results in a template for consistency.
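A template for capturing Gemba Walk results consistently could look something like the sketch below. The `GembaFinding` class and its field names are hypothetical. The four yes/no questions mirror the equipment features listed above, and a real template would likely also record business-process observations.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class GembaFinding:
    """One row of a walk template: the same questions for every system."""
    equipment_id: str
    individual_access_control: bool
    audit_trail: bool
    network_connectivity: bool
    backup: bool

    def gaps(self) -> list[str]:
        """List the expected features that this equipment lacks."""
        return [
            f.name
            for f in fields(self)
            if f.name != "equipment_id" and not getattr(self, f.name)
        ]
```

For example, `GembaFinding("HPLC-01", False, True, True, False).gaps()` lists `individual_access_control` and `backup` as the items to carry into the risk assessment (the equipment ID here is made up).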

Develop a prioritized plan: This stage involves conducting a risk assessment of data integrity issues and vulnerabilities discovered during Gemba Walks. Risk assessment scores serve as the basis for prioritizing their remediation. It is essential to prioritize technical controls over procedural controls. For example, some regulatory agencies may temporarily accept a procedural control in which a notebook is kept near a system that uses a common password, and users manually record their login and logout details in it when accessing the system. This procedure is error prone, so the project plan must prioritize upgrading systems that use a common password to individualized access.

The plan must provide a clear roadmap for deliverables such as data integrity-related SOPs, team roles and responsibilities, and system upgrades or replacements. Once the plan is firmed up, the project team uses it to develop a budget. It is advisable to prioritize budget allocation to mitigate items with higher risk scores. Regulatory agencies are known to support such an incremental approach, provided they see the roadmap is advanced during each round of their audit. It is the project sponsor’s role to obtain budget approval from executive management.
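The article does not prescribe a scoring method, so the following sketch assumes an FMEA-style score: severity times likelihood times detectability, each rated from 1 to 5. The `Vulnerability` class, the scales, and the example ratings are hypothetical and serve only to show how scores can drive the order of remediation and budget allocation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    description: str
    severity: int       # 1 (minor) to 5 (critical impact on product or patient)
    likelihood: int     # 1 (rare) to 5 (frequent)
    detectability: int  # 1 (easily detected) to 5 (likely to go unnoticed)

    @property
    def risk_score(self) -> int:
        """Assumed FMEA-style score: higher means remediate sooner."""
        return self.severity * self.likelihood * self.detectability


def prioritize(items: list[Vulnerability]) -> list[Vulnerability]:
    """Order vulnerabilities from highest to lowest risk score."""
    return sorted(items, key=lambda v: v.risk_score, reverse=True)
```

Under these assumed ratings, a shared-password system scored 5, 4, and 4 (score 80) would be remediated before an SOP gap scored 3, 2, and 2 (score 12).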

3. Deploy phase

The deploy and train stage consists of several activities. These activities are mini projects performed by several breakout implementation teams. Consequently, the activities of this stage may run in parallel. They may include procuring new systems, upgrading equipment, developing new data integrity SOPs, modifying existing SOPs as applicable, and user training. Once these mini projects are complete and the company’s QA group has certified the systems “fit for use,” the systems are deployed.

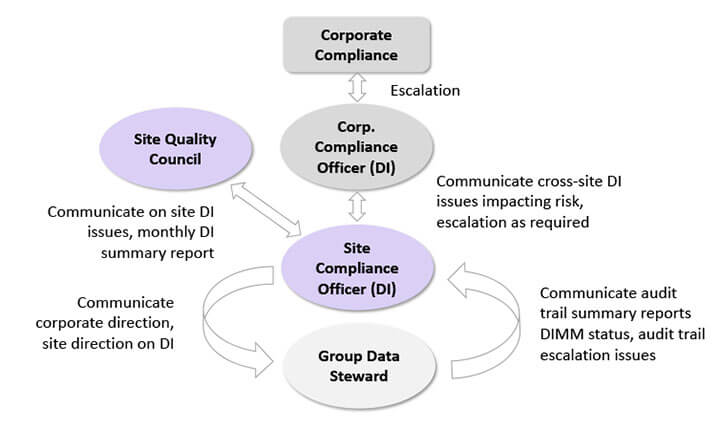

With help and guidance from the project sponsor, the project team should ensure an effective data governance management structure is set up, as depicted in Figure 5 below.

Figure 5 Data Governance Setup

Functional departments have designated data stewards who ensure data integrity practices and procedures are followed. They are also tasked with gathering data integrity issues and bringing them to weekly meetings hosted by the site compliance officer (SCO). The SCO communicates corporate directions and decisions on data integrity to the data stewards and escalates data integrity issues from the data stewards to the corporate compliance officer (CCO). The CCO, like the SCO, has a dual role and serves as a data conduit between the SCOs and corporate compliance. This structure enables data integrity information to flow across the layers of a company’s management structure. Smaller companies may collapse the management structure by eliminating intermediate reporting layers, resulting in the SCO reporting issues directly to the CEO and executive management team.
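The reporting structure described above can be sketched as a simple lookup from each role to the role it escalates to. The dictionary names and role strings are hypothetical labels for this illustration. The second mapping shows the collapsed structure a smaller company might use.

```python
# Assumed escalation chain for a multi-site company.
REPORTS_TO = {
    "data steward": "site compliance officer",
    "site compliance officer": "corporate compliance officer",
    "corporate compliance officer": "corporate compliance",
}

# Collapsed structure: the SCO reports directly to executive management.
SMALL_COMPANY_REPORTS_TO = {
    "data steward": "site compliance officer",
    "site compliance officer": "executive management",
}


def escalation_path(role: str, reports_to: dict[str, str]) -> list[str]:
    """Follow the chain upward from a role and return each level reached."""
    path = []
    seen = {role}
    while role in reports_to:
        role = reports_to[role]
        if role in seen:
            raise ValueError("circular reporting structure")
        seen.add(role)
        path.append(role)
    return path
```

Corporate directions flow along the same chain in the opposite direction, which is why the SCO and CCO are each described as having a dual role.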

4. Monitor phase

The monitor and revise stage activities are designed to obtain better transparency and visibility into the data integrity program and provide corrections or revisions to improve the program’s effectiveness. Since data integrity is a measure of the ability of the company’s business process to inhibit data integrity vulnerabilities, monitoring key performance indicators (KPIs) is necessary. These KPIs should be regularly published to promote awareness of data integrity.

Reviews of data integrity-related 483s, warning letters, and corrective and preventive actions (CAPAs) could also provide input to revise the program. To demonstrate continual improvement, set a schedule to review your data integrity plan every six months.

Monitoring for Effectiveness: The Data Integrity Maturity Model

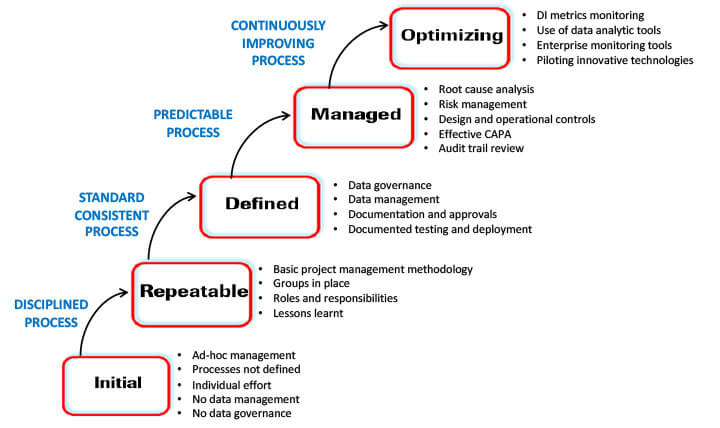

The data integrity maturity model (DIMM) provides a consistent method of scoring the effectiveness of the data integrity program. The model guides the company in pursuing a continual improvement strategy to climb the maturity scale.

When internal auditors use the data integrity maturity model to place the auditee department at a certain maturity level, it provides the department with a consistent measure of what needs to be done to climb the maturity ladder. Figure 6 below enumerates the data integrity maturity model.

Figure 6 The Data Integrity Maturity Model

- At the chaotic initial or ground level, processes are ad hoc and ill-defined, with no data governance and a heavy dependence on individuals.

- As processes become disciplined using tools such as project management, more precise team roles and responsibilities are defined, and lessons learned from past projects are utilized for future ones, the maturity level progresses to repeatable.

- As processes standardize, the company reaches the defined level. At this level, there is good data governance and management, documentation and approval processes, and responsible, accountable, consulted, and informed (RACI) charts, tracking every function, including testing and deployment.

- As processes get predictable, the company moves to the maturity level classified as managed. At this level, the company has an effective risk management program, a risk control strategy, and consistent and documented audit trail reviews.

- Optimizing is the highest data integrity maturity level. At this level, the company is in a continual improvement mode.
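The five levels above form an ordered scale, which an internal audit tool might represent as follows. This is a sketch only: the numeric values and the `next_level` helper are assumptions of this example, while the level names come from the model described above.

```python
from enum import IntEnum
from typing import Optional


class MaturityLevel(IntEnum):
    INITIAL = 1      # ad hoc, ill-defined processes; no data governance
    REPEATABLE = 2   # disciplined processes; lessons learned are reused
    DEFINED = 3      # standardized processes; good data governance
    MANAGED = 4      # predictable processes; effective risk management
    OPTIMIZING = 5   # continual improvement mode


def next_level(current: MaturityLevel) -> Optional[MaturityLevel]:
    """Return the level a department should target next, if any."""
    if current is MaturityLevel.OPTIMIZING:
        return None
    return MaturityLevel(current + 1)
```

Because the levels are ordered integers, departments can be compared directly, for example `MaturityLevel.DEFINED < MaturityLevel.MANAGED`.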

Conclusion

The rise in data integrity warning letters and Form 483 citations is forcing companies to obtain a comprehensive understanding of data integrity (6). Regulatory agencies are actively hiring personnel who are familiar with the intricacies of electronic data. Pharmaceutical industry management must ensure an adequate budget for personnel with the right blend of IT and compliance expertise and task them with the design, development, and implementation of data integrity programs. The program developers should obtain a good understanding of the sociotechnical aspects of the company that impact data integrity. They also need to consider the controls triad required to protect data from assaults on its integrity. Most of all, the designers should design a program that will transform the company into a process-centric company, where the focus is not confined to processes and procedures but extends to associated elements, including people, equipment, and leadership through management by walking around.

References

1. The 5P Model for Data Integrity, by Chinmoy Roy, 2018. IVT Jr GXP Compliance Vol 22 Issue 5. http://www.ivtnetwork.com/article/5p-model-data-integrity

2. The 5P Model developed by Pryor, Toombs, and White who studied under Dr. W. Edwards Deming, Dr. Joseph Juran, and other well-known experts.

3. The Data Integrity Triad, by Chinmoy Roy and Arjun Guha Thakurta, 03/20/2017. Express Pharma. https://www.expresspharma.in/the-data-integrity-triad/

4. Data Integrity and Compliance with Drug CGMP, Questions and Answers, Guidance for Industry, U.S. Department of Health and Human Services, Food and Drug Administration, December 2018.

5. Conducting Data Integrity Audits: A Quick Guide, by Chinmoy Roy, 9/11/2019. https://www.thefdagroup.com/blog/conducting-data-integrity-audits-a-quick-guide

6. The Data Integrity Triad, by Chinmoy Roy, 9/28/2017. The Data Integrity Triad: A Framework for FDA-Regulated Manufacturers (thefdagroup.com)

Chinmoy Roy is a biopharmaceutical consultant with over 38 years of experience in computerized systems validation, data integrity,
